Overwhelmed by queries, physicians are turning to artificial intelligence to correspond with patients. Many patients have no clue that the replies are software-generated.

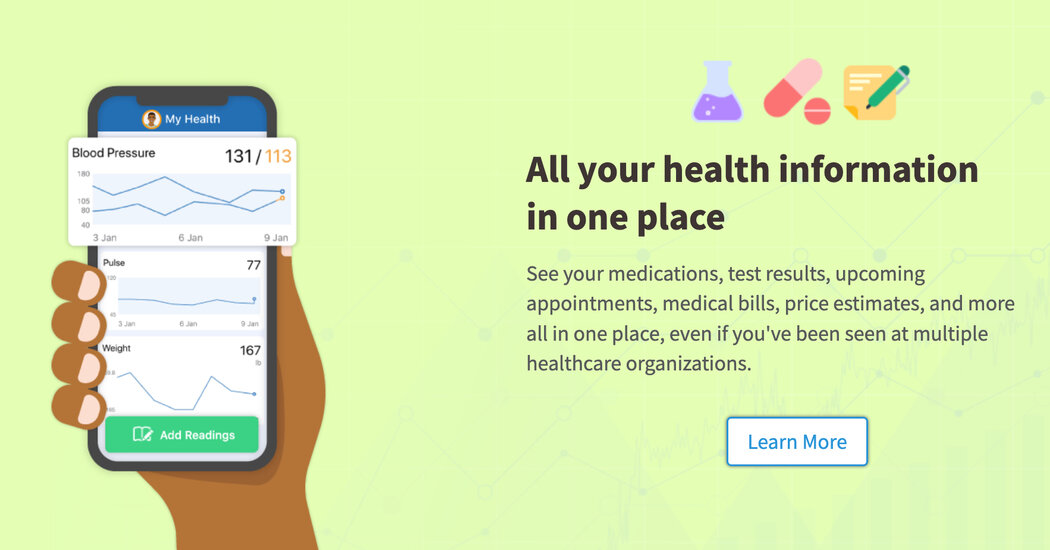

Every day, patients send hundreds of thousands of messages to their doctors through MyChart, a communications platform that is nearly ubiquitous in U.S. hospitals.

They describe their pain and divulge their symptoms — the texture of their rashes, the color of their stool — trusting the doctor on the other end to advise them.

But increasingly, the responses to those messages are not written by the doctor — at least, not entirely. About 15,000 doctors and assistants at more than 150 health systems are using a new artificial intelligence feature in MyChart to draft replies to such messages.

Many patients receiving those replies have no idea that they were written with the help of artificial intelligence. In interviews, officials at several health systems using MyChart’s tool acknowledged that they do not disclose that the messages contain A.I.-generated content.

The trend troubles some experts who worry that doctors may not be vigilant enough to catch potentially dangerous errors in medically significant messages drafted by A.I.

In an industry that has largely used A.I. to tackle administrative tasks like summarizing appointment notes or appealing insurance denials, critics fear that the wide adoption of MyChart’s tool has allowed A.I. to edge into clinical decision-making and doctor-patient relationships.